Preface

A Persistent Volume (PV) lets users map external storage into the cluster, while a Persistent Volume Claim (PVC) works like a permit that allows an authorized application (a Pod) to use a PV.

- Persistent Volume (PV).
- Persistent Volume Claim (PVC).
- Storage Class (SC).

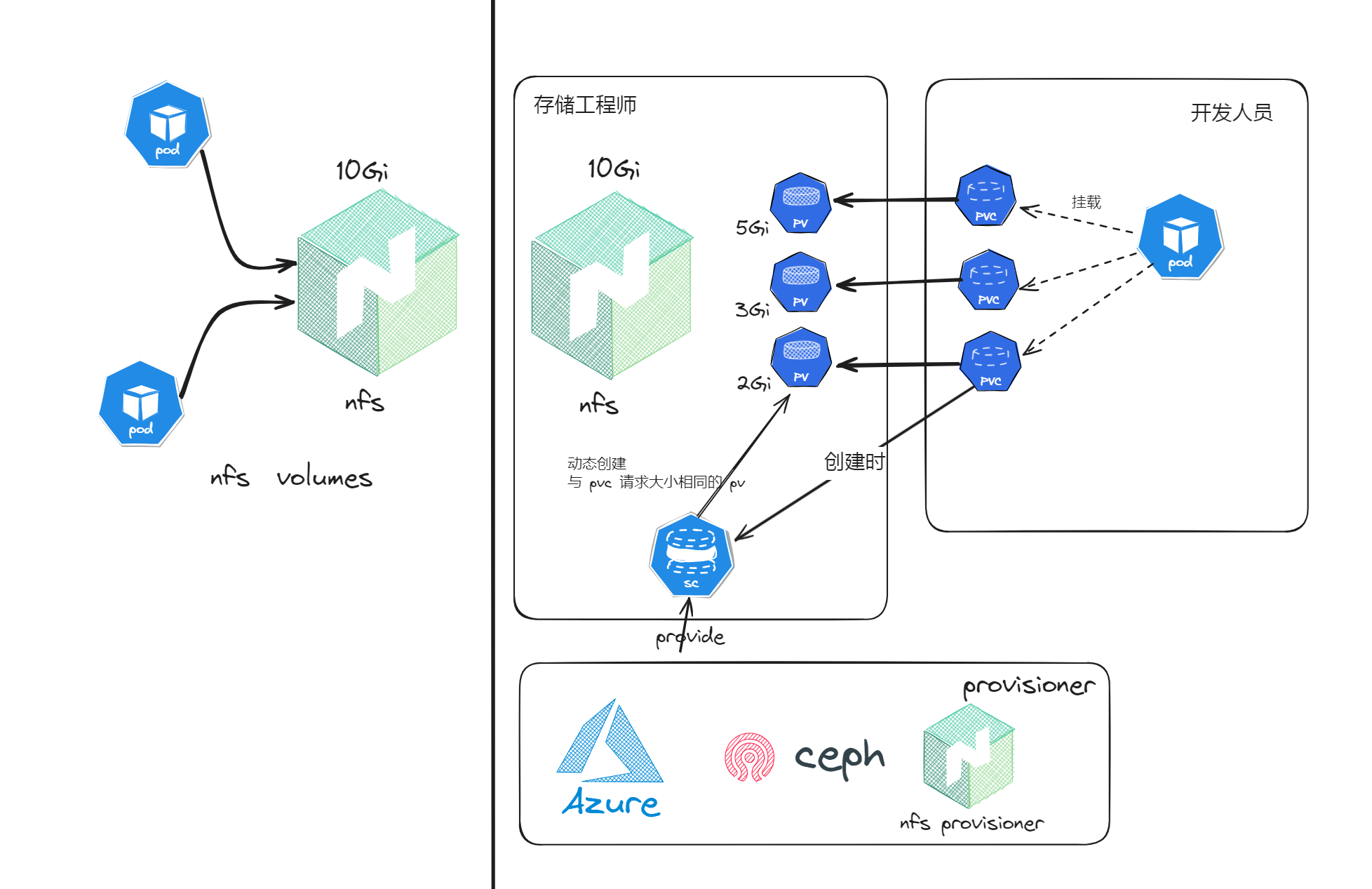

In short, a PV represents storage in Kubernetes; a PVC is like a permit that grants a Pod access to a PV; and a StorageClass (SC) makes the allocation dynamic.

Cluster environment

Operating system: Ubuntu 20.04

| IP | Hostname | Spec (CPU / RAM / disk) |
|---|---|---|
| 192.168.254.130 | master01 | 2C 4G 30G |
| 192.168.254.131 | node01 | 2C 4G 30G |
| 192.168.254.132 | node02 | 2C 4G 30G |
| 192.168.254.133 | nfs-tools | 2C 4G 30G |

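NFS environment setup

Install NFS

    sudo apt-get install -y nfs-kernel-server # install the NFS server
    sudo apt-get install -y nfs-common # install the NFS client

The NFS server only needs to be installed on one machine. The NFS client (nfs-common) is needed on every node that will mount the share.

Create the NFS shared directory

    mkdir -p /nfs/share
    chmod -R 777 /nfs/share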

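Edit the configuration: vim /etc/exports

    # By default, when the user accessing the share from a client is root, its privileges are mapped to the anonymous user (UID and GID usually become nobody).
    # Adding no_root_squash disables that mapping so the root account keeps working as root.
    /nfs/share *(rw,sync,no_root_squash) # * allows systems from any IP range to access this NFS directory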

Apply the configuration

    exportfs -r
    exportfs # list the active exports to confirm
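When the setup is scripted rather than typed into vim, the export entry should not be appended twice. The following is a minimal sketch, assuming one entry per exported directory; `add_export` and the `EXPORTS_FILE` variable are illustrative names, not part of the NFS tooling.

```shell
# Sketch: append an export entry only if the directory is not exported yet.
# EXPORTS_FILE and add_export are hypothetical names used for illustration.
EXPORTS_FILE="${EXPORTS_FILE:-/etc/exports}"

add_export() {
  export_dir="$1"
  export_spec="$2"
  # An entry starts with the exported path followed by whitespace.
  if grep -Eq "^${export_dir}[[:space:]]" "$EXPORTS_FILE" 2>/dev/null; then
    echo "already exported: $export_dir"
  else
    printf '%s %s\n' "$export_dir" "$export_spec" >> "$EXPORTS_FILE"
    echo "added: $export_dir"
  fi
}
```

A possible invocation on the NFS server would be `add_export /nfs/share '*(rw,sync,no_root_squash)'` followed by `exportfs -r`. The path is used as a regular expression here, so paths containing regex metacharacters would need escaping.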

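Start the service

    sudo /etc/init.d/nfs-kernel-server restart

    # from a client, mount the share to test it (the mount point must exist first)
    mkdir -p /nfsremote
    mount -t nfs 192.168.254.133:/nfs/share /nfsremote -o nolock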

- If the mount fails with

      mount.nfs: access denied by server while mounting

> On the server, add `insecure` to the entry in `/etc/exports`, for example: `/nfs/share *(insecure,rw,sync,no_root_squash)`
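The `insecure` option lifts the default requirement that client requests originate from a port below 1024. As a rough sketch of the fix above, the helper below inserts the option into the entry for one directory; `add_insecure` is a hypothetical name, and the sketch assumes the entry has a single client specification with its options in parentheses.

```shell
# Sketch: add the "insecure" option to the export entry of one directory.
# add_insecure is a hypothetical helper; $1 is the exported path, $2 the exports file.
add_insecure() {
  target_dir="$1"
  target_file="$2"
  # Nothing to do if the entry already lists the option.
  if grep -E "^${target_dir}[[:space:]]" "$target_file" | grep -q 'insecure'; then
    return 0
  fi
  # Insert "insecure," right after the first opening parenthesis of that entry.
  tmp_file="$(mktemp)"
  sed "\#^${target_dir}[[:space:]]#s#(#(insecure,#" "$target_file" > "$tmp_file" \
    && cat "$tmp_file" > "$target_file"
  rm -f "$tmp_file"
}
```

On the server this could be run as `add_insecure /nfs/share /etc/exports`, followed by `exportfs -r` so the change takes effect.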

Sample data

    echo "hello world" > /nfs/share/index.html

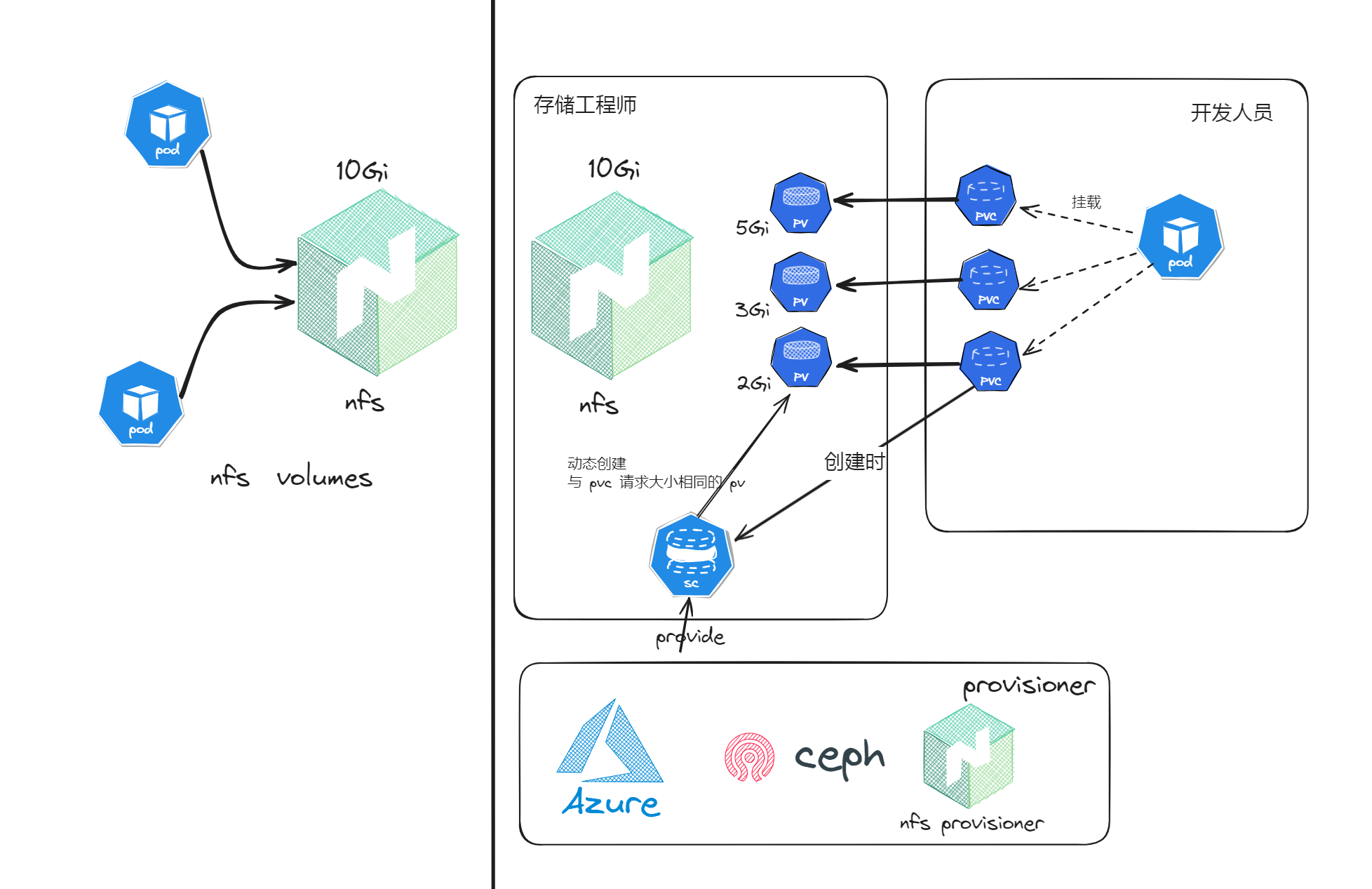

Mounting NFS directly in a Pod

Architecture diagram

pod_nfs.yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nfs-testpod
      labels:
        app: nfs-testpod
    spec:
      containers:
        - name: nfs-testpod
          image: nginx
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: true
          ports:
            - containerPort: 80
              hostPort: 8080 # expose this port on the host as 8080
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: datadir
      restartPolicy: Always
      volumes:
        - name: datadir
          nfs:
            server: 192.168.254.133
            path: /nfs/share

Verify the result (`k` is assumed to be a shell alias for `kubectl`):

    $ k create -f pod_nfs.yaml
    $ k get po -owide
    NAME          READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    nfs-testpod   1/1     Running   0          16m   10.244.196.134   node01   <none>           <none>
    $ curl node01:8080
    hello world

Mounting NFS through a PVC

Architecture diagram

pod_nfs_pv_pvc.yaml:

    # PV: allocate the storage resource
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv01
      labels:
        pv: nfs-pv01
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      # back the PV with the NFS export
      nfs:
        path: /nfs/share
        server: 192.168.254.133
    ---
    # PVC: request storage from a PV
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc01
      labels:
        pv: nfs-pvc01
    spec:
      # storage size requested from the PV
      resources:
        requests:
          storage: 500Mi
      accessModes:
        - ReadWriteMany
      # select the PV by label
      selector:
        matchLabels:
          pv: nfs-pv01
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: nfs-pv-pvc-pod
      labels:
        app: nfs-pv-pvc-pod
    spec:
      containers:
        - name: nfs-pv-pvc-pod
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              hostPort: 8081 # expose on host port 8081
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: datadir
      restartPolicy: Always
      volumes:
        - name: datadir
          persistentVolumeClaim:
            claimName: nfs-pvc01

Verify the result:

    $ k get po -owide
    NAME             READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    nfs-pv-pvc-pod   1/1     Running   0          97s   10.244.196.135   node01   <none>           <none>
    nfs-testpod      1/1     Running   0          16m   10.244.196.134   node01   <none>           <none>
    $ k get pv
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
    nfs-pv01   1Gi        RWX            Retain           Bound    default/nfs-pvc01                  <unset>                          8s
    $ k get pvc
    NAME        STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
    nfs-pvc01   Bound    nfs-pv01   1Gi        RWX                           <unset>                 10s
    $ curl node01:8081
    hello world

Note: a PV can be bound to only one PVC at a time.
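The output above shows nfs-pvc01 requesting 500Mi yet reporting a capacity of 1Gi. Because the binding is one-to-one, the claim takes the whole PV, so a PV qualifies when its capacity is at least the requested size (and its access modes are compatible). The arithmetic can be sketched as follows; `to_bytes` and `pv_fits` are illustrative helpers, not kubectl features, and only the Ki, Mi, and Gi suffixes are handled.

```shell
# Sketch: convert a Kubernetes binary-suffix quantity (Ki, Mi, Gi) to bytes.
# to_bytes and pv_fits are hypothetical helper names.
to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;  # treated as a plain number of bytes
  esac
}

# Succeeds when the PV capacity ($1) is at least the PVC request ($2).
pv_fits() {
  [ "$(to_bytes "$1")" -ge "$(to_bytes "$2")" ]
}

to_bytes 500Mi   # prints 524288000
to_bytes 1Gi     # prints 1073741824
pv_fits 1Gi 500Mi && echo "nfs-pv01 can satisfy nfs-pvc01"
```

This is a simplified picture of the size check only; the real binding also considers access modes, the label selector, and the storage class.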

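Dynamically provisioning PVs with a StorageClass

Architecture diagram

For a StorageClass to provision PVs dynamically, it needs a provisioner that matches the storage backend; this can also be a cloud provider's provisioner.

Since NFS is used here, an NFS provisioner is needed.

Install nfs-provisioner

The NFS provisioner used here is nfs-subdir-external-provisioner.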

    # the provisioner is installed with helm, so install helm first
    wget https://get.helm.sh/helm-v3.7.0-linux-amd64.tar.gz
    tar zxvf helm-v3.7.0-linux-amd64.tar.gz
    mv linux-amd64/helm /usr/local/bin/

    $ helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
    $ helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
        --set nfs.server=192.168.254.133 \
        --set nfs.path=/nfs/share
    # alternatively, run helm pull nfs-subdir-external-provisioner/nfs-subdir-external-provisioner and edit the values
    # if the default image cannot be pulled, helm pull the chart and change the image to aifeierwithinmkt/nfs-subdir-external-provisioner (a copy the author pushed to Docker Hub)

    NAME: nfs-subdir-external-provisioner
    LAST DEPLOYED: Sun Jan 28 15:39:16 2024
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

    # list the pods
    $ k get po -owide
    NAME                                              READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    nfs-pv-pvc-pod                                    1/1     Running   0          50m   10.244.196.135   node01   <none>           <none>
    nfs-subdir-external-provisioner-f8db66c64-cgpqk   1/1     Running   0          52s   10.244.196.137   node01   <none>           <none>
    nfs-testpod                                       1/1     Running   0          65m   10.244.196.134   node01   <none>           <none>

    # read the provisioner name from the pod's environment variables; it is used as the provisioner of the StorageClass
    k describe po nfs-subdir-external-provisioner-f8db66c64-cgpqk | grep PROVISIONER_NAME
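The grep above prints the whole line, label included. To get only the value for the StorageClass `provisioner` field, a small filter can be used. This is a sketch that assumes the describe output lists environment variables as `NAME:  value`; `provisioner_name` is a hypothetical helper name.

```shell
# Sketch: extract the PROVISIONER_NAME value from `kubectl describe pod` text on stdin.
# provisioner_name is a hypothetical helper name.
provisioner_name() {
  # Print the second field of the first line whose first field is the variable label.
  awk '$1 == "PROVISIONER_NAME:" { print $2; exit }'
}
```

It would be used as `k describe po nfs-subdir-external-provisioner-f8db66c64-cgpqk | provisioner_name`; the printed value has to match the `provisioner` field of the StorageClass exactly.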

pod_nfs_sc_pvc.yaml:

    # define the StorageClass
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-sc
    provisioner: cluster.local/nfs-subdir-external-provisioner
    mountOptions:
      - nfsvers=4
    #parameters:
    #  server: nfs-server.example.com
    #  path: /share
    #  readOnly: "false"
    ---
    # PVC: request storage; the PV is provisioned through the StorageClass
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc02
      labels:
        pv: nfs-pvc02
    spec:
      # requested storage size
      resources:
        requests:
          storage: 500Mi
      accessModes:
        - ReadWriteMany
      # specify the StorageClass
      storageClassName: nfs-sc
      # select the PV by label
      # selector:
      #   matchLabels:
      #     pv: nfs-pv01
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: nfs-sc-pvc-pod
      labels:
        app: nfs-sc-pvc-pod
    spec:
      containers:
        - name: nfs-sc-pvc-pod
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              hostPort: 8082
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: datadir
      restartPolicy: Always
      volumes:
        - name: datadir
          persistentVolumeClaim:
            claimName: nfs-pvc02

Verify the result:

    $ k get po -owide
    NAME             READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    nfs-sc-pvc-pod   1/1     Running   0          10s   10.244.196.138   node01   <none>           <none>
    $ k get pvc
    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
    nfs-pvc02   Bound    pvc-7ebac745-38f2-4ea7-8d18-0465f6fdf2d1   500Mi      RWX            nfs-sc         <unset>                 2m4s
    $ curl node01:8082
    hello world
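With nfs-subdir-external-provisioner, each dynamically provisioned PV gets its own subdirectory under the exported path, named `${namespace}-${pvcName}-${pvName}` by default when no custom path pattern is configured. The page served on port 8082 therefore comes from the index.html inside that subdirectory. A small sketch for locating it on the NFS server, assuming the default naming; `provisioned_dir` is a hypothetical helper name.

```shell
# Sketch: build the default directory path the provisioner creates for a claim.
# Arguments: export path, namespace, PVC name, PV name.
# provisioned_dir is a hypothetical helper name.
provisioned_dir() {
  printf '%s/%s-%s-%s\n' "$1" "$2" "$3" "$4"
}

provisioned_dir /nfs/share default nfs-pvc02 pvc-7ebac745-38f2-4ea7-8d18-0465f6fdf2d1
# prints /nfs/share/default-nfs-pvc02-pvc-7ebac745-38f2-4ea7-8d18-0465f6fdf2d1
```

Listing /nfs/share on the NFS server after the claim is bound is a quick way to confirm the actual directory name.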